In everyday life we experience many limitations on energy transfers. A hot bowl of soup becomes cooler, for example, but a cool bowl of soup never spontaneously heats up. The second law of thermodynamics is one of the fundamental theories of nature that explains this (along with many other things). The most intuitive statement of the second law of thermodynamics is that heat tends to diffuse evenly: heat flows from hot to cold. Heat is a quantity of energy (joules, calories, etc.). The quantity of heat energy thus depends directly on the amount of material the system contains.

Temperature, however, is a relative term: two objects are at the same temperature if no heat energy flows spontaneously from one object to the other. Every temperature scale requires two reproducible reference points. At absolute zero, the kinetic energy of atoms and molecules is at its minimum. A consideration of heat versus temperature leads us to another important property of materials: the heat capacity. Every material has the capacity to store heat energy, but some substances do this better than others. Think about placing a pound of copper and a pound of water on identical burners. Which one heats up more quickly?

Heat capacity is the amount of heat energy that a substance can hold. Water has a much higher heat capacity than copper, so the copper heats up more quickly. A useful measure of the heat capacity is called “specific heat,” the energy required to raise the temperature of 1 gram of a substance by 1 °C. For water, this amount of energy is defined as the calorie. By contrast, it takes only about 0.1 calorie to raise a gram of copper by a degree.
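The copper-versus-water comparison can be worked through numerically. The sketch below assumes the standard relation Q = m × c × ΔT, which the text does not state explicitly, and uses the specific heats quoted above (1 cal per gram per °C for water, roughly 0.1 for copper). The function name and the 10 °C temperature rise are illustrative choices, not taken from the text.

```python
GRAMS_PER_POUND = 453.6  # approximate conversion factor

# Specific heats in calories per gram per degree Celsius, as quoted in the text.
SPECIFIC_HEAT_CAL = {"water": 1.0, "copper": 0.1}


def heat_required_cal(material, mass_g, delta_t_c):
    """Heat in calories needed to warm mass_g grams by delta_t_c degrees (Q = m * c * dT)."""
    return mass_g * SPECIFIC_HEAT_CAL[material] * delta_t_c


# Hypothetical scenario: warm one pound of each material by 10 degrees Celsius.
water_cal = heat_required_cal("water", GRAMS_PER_POUND, 10.0)
copper_cal = heat_required_cal("copper", GRAMS_PER_POUND, 10.0)

print(f"water:  {water_cal:.0f} cal")
print(f"copper: {copper_cal:.0f} cal")
print(f"water needs about {water_cal / copper_cal:.0f} times as much heat as copper")
```

On identical burners delivering heat at the same rate, the pound of copper would therefore reach a given temperature in roughly one tenth of the time, ignoring losses to the surroundings.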

The second law of thermodynamics depends on the motion of heat. Heat can move by three different mechanisms: conduction, convection, and radiation. Conduction is the transfer of heat from atom to atom in a solid object. Convection is the transfer of heat through a moving fluid, either a liquid or a gas. Radiation is the transfer of heat by a form of light that travels 186,000 miles per second. Insulation in animals, in clothing, and in houses is designed to reduce the inevitable transfer of heat. Most heat loss comes from convection. Fur and fiberglass insulation trap pockets of air so small that convection can’t occur.

Thermos bottles carry this strategy a step further by having a vacuum barrier, across which neither conduction nor convection can occur. The second law of thermodynamics applies to so many physical situations that there are a number of very different ways to express the same fundamental principle. A second, subtler statement of the second law is that an engine cannot be designed that converts heat energy completely to useful work. Thermodynamics was of immense importance to designers of steam engines, so many theoretical insights came from this practical device.

The French military engineer Nicolas Sadi Carnot (1796–1832) came the closest to deriving the second law from a study of work and heat. Carnot considered two sides of the relationship between work and heat. He recognized that work can be converted to heat energy with 100% efficiency. It is possible to convert the gravitational potential of an elevated object, or the chemical potential of a lump of coal, completely to heat without any loss. Converting heat to work is more restricted. Inevitably, some heat winds up heating the engine and escaping into the surroundings. By analogy, you can expend work to raise water and fill a reservoir and, with care, do so without losing a drop. But if the water is released to produce work, some of the water has to flow through the system.

Carnot’s great contribution was the derivation of the exact mathematical law for the maximum possible efficiency of any engine, that is, the percentage of the heat energy that can actually do work. The maximum efficiency of an engine depends on two temperatures: T hot, the temperature of the hot reservoir (burning coal, for example), and T cold, the temperature of the cold reservoir of the surroundings into which heat must flow (usually the air or cooling water). An engine is a mechanical device interposed between two heat reservoirs. In this sense, an engine is any system that uses heat to do work: the Sun, the Earth, your body, or a steam engine.
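The text does not write Carnot's law out. In its standard form, the maximum efficiency is 1 − T cold / T hot, with both temperatures measured on an absolute scale such as kelvin. The sketch below is a minimal illustration of that formula; the function name and the example reservoir temperatures are assumptions chosen for illustration, not values from the text.

```python
def carnot_efficiency(t_hot_k, t_cold_k):
    """Maximum fraction of heat convertible to work between two reservoirs.

    Both temperatures must be absolute (kelvin), with t_hot_k > t_cold_k > 0.
    """
    if t_cold_k <= 0 or t_hot_k <= t_cold_k:
        raise ValueError("require t_hot_k > t_cold_k > 0 (kelvin)")
    return 1.0 - t_cold_k / t_hot_k


# Hypothetical reservoirs: an 800 K heat source and 300 K surroundings.
limit = carnot_efficiency(800.0, 300.0)
print(f"maximum efficiency: {limit:.1%}")

# Raising the hot-reservoir temperature raises the limit.
print(f"with a 1200 K source: {carnot_efficiency(1200.0, 300.0):.1%}")
```

For these assumed temperatures the limit is 62.5%, and it rises to 75% when the hot reservoir is raised to 1200 K. No real engine reaches the limit, because of friction and heat leaks.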

In Carnot’s day, before these principles were understood, typical steam engine efficiencies were 6%. Today, improved insulation and cooling have raised efficiencies of coal-burning power plants to 40%, which is close to 90% of the theoretical limit. Carnot’s equation illustrates why fossil fuels— coal, gas, and oil—are so valuable. These carbon-rich fuels burn with an extremely hot flame, thus elevating the temperature of the hot reservoir and increasing the maximum efficiency.

Part 2: Entropy

As scientists of the 19th century thought about the implications of energy, they eventually came to a startling realization—the great principle that every isolated system becomes more disordered with time. In this essay I’m going to introduce the concept of entropy—the tendency of systems to become messier quite spontaneously, in spite of everything we do.

The discussion of the second law of thermodynamics thus far has focused on the behavior of heat energy: Heat flows spontaneously from warmer to cooler objects, and an engine cannot be 100% efficient. As useful as these ideas may be, the second law reaches far beyond the concept of heat. In its most general form, the second law comments on the state of order of the universe. All systems in the universe have a general tendency to become more disordered with time. Many experiments can be designed to study this phenomenon.

Shuffle a fully ordered deck of cards and it becomes disordered, never the other way around. Shake a jar of layered colored marbles and they become mixed up, never the other way around. These examples are analogous to a situation where a hotter and a colder object come into contact. The heat energy of the hotter material gradually spreads out. In each of these cases the original state of order could be recovered, but it would take time and energy.

The concept of entropy was introduced in 1865 by the German physicist Rudolf Clausius (1822–1888) to quantify this tendency of natural systems to become more disordered. Clausius synthesized the ideas of Carnot, Joule, and others and published the first clear statement of the two laws of thermodynamics in 1850. The second law, however, was not presented in a rigorous mathematical form. Clausius realized that for the second law to be quantitatively useful, it demanded a new, rather abstract physical variable called entropy. He defined entropy purely in terms of heat and temperature: entropy is the ratio of heat energy over temperature.

Clausius observed the behavior of steam engines and realized that this ratio must either remain constant or increase; that is, the heat divided by the temperature of the cold reservoir is always greater than or equal to the heat divided by the temperature of the hot reservoir (or heat divided by work). Thus the entropy of an isolated system does not decrease.
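Clausius's ratio can be illustrated with a simple case: a fixed quantity of heat flowing directly from a hot reservoir to a cold one. The sketch below assumes both reservoirs are large enough that their temperatures stay constant, and the function name and numbers are hypothetical. The hot reservoir loses entropy Q / T hot, the cold one gains Q / T cold, and the sum is the total change.

```python
def entropy_change_j_per_k(heat_j, t_hot_k, t_cold_k):
    """Total entropy change when heat_j joules flow from t_hot_k to t_cold_k.

    Assumes idealized reservoirs whose temperatures (in kelvin) do not change.
    """
    entropy_lost_by_hot = heat_j / t_hot_k
    entropy_gained_by_cold = heat_j / t_cold_k
    return entropy_gained_by_cold - entropy_lost_by_hot


# Hypothetical case: 1000 J flowing from a 400 K object to a 300 K object.
delta_s = entropy_change_j_per_k(1000.0, 400.0, 300.0)
print(f"total entropy change: {delta_s:.3f} J/K")
```

The result is positive whenever T hot exceeds T cold. Running the same heat flow in reverse, from cold to hot, would give a negative total, which is why it does not happen spontaneously.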

One can summarize the two laws of thermodynamics as energy is constant (first law), but entropy tends to increase (second law). A more intuitive approach to entropy is obtained by thinking about heat energy as the kinetic energy of vibrating atoms. Heat spreads out because faster atoms with more kinetic energy collide with slower atoms; eventually, the kinetic energy averages out. Ultimately, the order of any system can be measured by the orderly arrangement of its smallest parts: its atoms. A crystal of table salt with regularly repeating patterns of sodium and chlorine atoms is highly ordered. A lump of coal, similarly, has an ordered distribution of energy-rich carbon-carbon bonds. Dissolve the salt in water or burn the coal and energy is released, while the atoms’ disorder (entropy) increases.

The “arrangement” of atoms can also refer to the distribution of velocities of particles in a gas, i.e., its temperature. Imagine what happens when two reservoirs of gas at different temperatures are mixed. The temperature averages out and the entropy, or randomness, increases. This definition of entropy was placed on a firm quantitative footing in the late 19th century by the Austrian physicist Ludwig Boltzmann (1844–1906). Boltzmann used probability theory to demonstrate that, for any given configuration of atoms, entropy is related to the number of possible ways you can achieve that configuration. The most probable arrangement is the one observed. Entropy is the microscopic manifestation of probability. Boltzmann is now considered one of the greatest physicists of all time, though during his lifetime the majority of the physics community did not accept his theories. This rejection worsened his preexisting depression, which culminated in his suicide. Only a few decades later, his ideas were broadly accepted, and his view of the probabilistic nature of atomic behavior went on to form part of the basis of modern quantum mechanics.
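Boltzmann's counting argument can be sketched with a toy model. The text does not give his formula; in its standard form it is S = k × ln W, where W is the number of microscopic arrangements that produce a given overall configuration and k is the Boltzmann constant. The example below uses 100 coins as a stand-in for atoms, which is an illustrative assumption, and counts how many ways each number of heads can occur.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in joules per kelvin


def multiplicity(n_coins, n_heads):
    """Number of distinct arrangements of n_coins coins showing n_heads heads."""
    return math.comb(n_coins, n_heads)


def boltzmann_entropy(ways):
    """Entropy S = k * ln(W) for a configuration achievable in `ways` ways."""
    return K_B * math.log(ways)


N_COINS = 100  # toy system size, chosen for illustration

# The perfectly ordered state (all tails) can be achieved in exactly one way.
ordered_ways = multiplicity(N_COINS, 0)

# Find the head count with the largest number of arrangements.
most_probable_heads = max(range(N_COINS + 1), key=lambda h: multiplicity(N_COINS, h))
mixed_ways = multiplicity(N_COINS, most_probable_heads)

print(f"all tails: {ordered_ways} way, entropy {boltzmann_entropy(ordered_ways)} J/K")
print(f"{most_probable_heads} heads: {mixed_ways:.3e} ways, "
      f"entropy {boltzmann_entropy(mixed_ways):.3e} J/K")
```

The evenly mixed state of 50 heads can be reached in vastly more ways than the fully ordered one, so a randomly shaken system is overwhelmingly likely to be found near it. That is the sense in which the most probable arrangement is the one observed.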

Cosmic Implications of Second Law and Entropy:

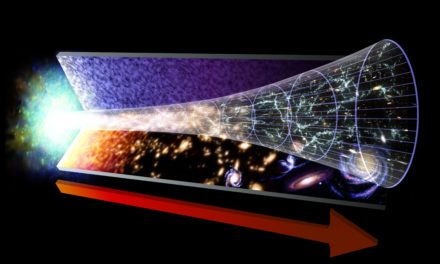

The second law of thermodynamics has far-reaching consequences. One consequence of the second law of thermodynamics is that heat in the universe must eventually spread out evenly. Life on Earth is possible because the Sun provides a steady source of energy, and the Earth holds a vast reservoir of interior heat. In the 1890s, many scholars addressed the concept of the “heat death” of the universe.

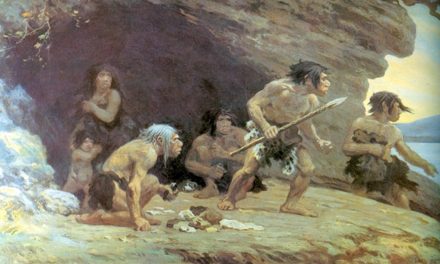

The second law of thermodynamics provides us with tantalizing insights about the nature of time. Imagine playing a favorite movie backwards. Some forms of motion, such as a ball flying through the air, seem completely reversible. Other images are obviously impossible in the real world. The tendency of a system’s entropy to increase defines the arrow of time. Living things must also obey the laws of thermodynamics, which come directly into play with the concept of trophic levels. Every organism must compete for a limited supply of energy. Plants, in the first trophic level, get their energy directly from the Sun. Herbivores, the second trophic level, obtain energy from plants, but about 90% of the plant’s chemical energy is lost in the process. The second law of thermodynamics helps to explain why top predators like lions and killer whales are relatively rare.
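The rarity of top predators follows from simple arithmetic. The sketch below assumes that the roughly 90% loss quoted for the step from plants to herbivores applies at every step up the food chain, which is a common rule of thumb rather than something the text states. The starting energy and the function name are hypothetical.

```python
def energy_by_trophic_level(primary_energy, levels, transfer_fraction=0.10):
    """Energy available at each trophic level, starting with plants at index 0.

    Assumes the same fraction of energy is passed on at every step.
    """
    return [primary_energy * transfer_fraction ** i for i in range(levels)]


# Hypothetical ecosystem: plants capture 1,000,000 units of energy.
LEVEL_NAMES = ["plants", "herbivores", "carnivores", "top predators"]
energies = energy_by_trophic_level(1_000_000.0, len(LEVEL_NAMES))

for name, energy in zip(LEVEL_NAMES, energies):
    print(f"{name:>13}: {energy:>12,.0f} units")
```

Under these assumptions, only about one thousandth of the energy captured by plants remains available to the top predators, so an ecosystem can support far fewer of them.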

The second law of thermodynamics is often invoked by creationists to argue that life could not have evolved from nonlife. Living things are exceptionally ordered states, the argument goes, and such an unimaginably ordered system could not possibly arise spontaneously. Yet locally ordered states arise all the time. Every time a plant grows, new highly ordered cells are formed. One question that science has not yet fully addressed is the tendency for highly ordered complex states, such as life, to arise locally. Perhaps, someday, there will be another law of thermodynamics.

Any kind of work, chemical or otherwise, ultimately depends on an interaction between at least two locations, one hotter and one colder. Life itself is also a product of such a temperature difference: the thermodynamic disequilibrium between the Sun and the Earth allows living things to decrease entropy locally, at the expense of a global increase in entropy. However, a very long time into the future, the universe could end in what is described dramatically as the “heat death of the universe”. The heat death of the universe is the point at which the universe has reached a state of maximum entropy. This happens when all available energy (such as from a hot source) has moved to places of less energy (such as a colder source). Once heat ceases to flow, no more work can be acquired from heat transfer. The same kind of equilibrium state will also be reached with all other forms of energy (mechanical, electrical, etc.). Since no more work can be extracted from the universe at that point, it is effectively dead, especially for the purposes of humankind or any kind of intelligent life. It will occur because, according to the second law of thermodynamics, the entropy of an isolated system tends to increase until it reaches its maximum. As discussed above, the amount of entropy in a system is a measure of how disordered the system is: the higher the entropy, the more disordered it is.

This is sometimes easier to imagine if you think of an experiment on Earth. A chemical reaction will only occur spontaneously if it results in an overall increase of entropy, counting both the reacting substances and their surroundings. Let us imagine burning petrol. We start off with a liquid that contains atoms arranged in long chains, which is a fairly ordered state. When we burn it, we create a lot of heat, as well as water vapor and carbon dioxide. Both are small gaseous molecules, so the disorder of the atoms has increased, and the temperature of the surroundings has also increased.

Now let’s think what this means for the universe. Any reaction that takes place will result either in the products becoming less ordered or in heat being given off. This means that at some time far in the future, when all the possible reactions have taken place, all that will be left is heat (i.e., electromagnetic radiation) and fundamental particles. No reactions will be possible, because the universe will have reached its maximum entropy. The only reactions that could still take place would result in a decrease of entropy, which is not possible, so in effect the universe will have died.

The good news is that this eventual heat death of the universe lies so far in the future that the time scales are truly unimaginable. Among the last objects in the universe to disappear would be the gigantic black holes at the centers of galaxies, but even these could eventually disappear through so-called Hawking radiation. The decay time for a supermassive black hole of roughly one galaxy mass (10^11 solar masses) due to Hawking radiation is on the order of 10^100 years, so entropy can be produced until at least that time. After that time, the universe enters the so-called Dark Era, and is expected to consist chiefly of a dilute gas of photons and leptons. With only very diffuse matter remaining, activity in the universe will have tailed off dramatically, with extremely low energy levels and extremely long time scales. Speculatively, it is possible that the universe may enter a second inflationary epoch, or, if the current vacuum state is a false vacuum, the vacuum may decay into a lower-energy state. It is also possible that entropy production will cease and the universe will eventually reach heat death. Possibly another universe could be created by random quantum fluctuations or quantum tunneling in roughly 10^{10^{10^{56}}} years. These are time scales so long that there are not enough atoms in the universe to even write them down.
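The 10^100-year figure can be checked to order of magnitude. The sketch below assumes the standard semiclassical estimate for the evaporation time of a non-rotating, uncharged black hole, t = 5120 π G² M³ / (ħ c⁴), which the text does not state. It ignores any matter or radiation the hole absorbs along the way, uses rounded physical constants, and the function name is illustrative.

```python
import math

# Rounded physical constants in SI units.
G = 6.674e-11             # gravitational constant, m^3 kg^-1 s^-2
HBAR = 1.0546e-34         # reduced Planck constant, J s
C = 2.998e8               # speed of light, m/s
SOLAR_MASS_KG = 1.989e30
SECONDS_PER_YEAR = 3.156e7


def hawking_evaporation_years(mass_solar):
    """Approximate evaporation time in years for a black hole of mass_solar solar masses.

    Uses t = 5120 * pi * G^2 * M^3 / (hbar * c^4) and neglects any mass gained.
    """
    mass_kg = mass_solar * SOLAR_MASS_KG
    seconds = 5120 * math.pi * G**2 * mass_kg**3 / (HBAR * C**4)
    return seconds / SECONDS_PER_YEAR


galaxy_mass_hole_years = hawking_evaporation_years(1e11)
print(f"10^11 solar masses: about {galaxy_mass_hole_years:.0e} years")
```

Because the evaporation time scales with the cube of the mass, a hole 10^11 times heavier than the Sun takes 10^33 times longer to evaporate than a solar-mass hole, which is how the estimate reaches the order of 10^100 years.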